This policy brief focuses on short-term action (2026–2028) in AI governance and provides practical guidelines for experts and policymakers. It introduces a framework that embeds democratic pillars (participation, freedom, equality, transparency, knowledge, and the rule of law) across the entire AI lifecycle.

There is a way for the European Union to break the United States – China binary when it comes to governing AI.

Artificial intelligence (AI) development is currently outpacing public deliberation, allowing democratic oversight to slip as power concentrates in the private sector. At the same time, AI poses significant risks to our democratic systems, processes, and agency. With geopolitical competition over AI intensifying, policies should actively reclaim technological innovation for the democratic and public good.

This poses a foundational problem for democratic AI governance: how to address the broad risks of AI to democracy while realising its opportunities for strengthening democratic processes?

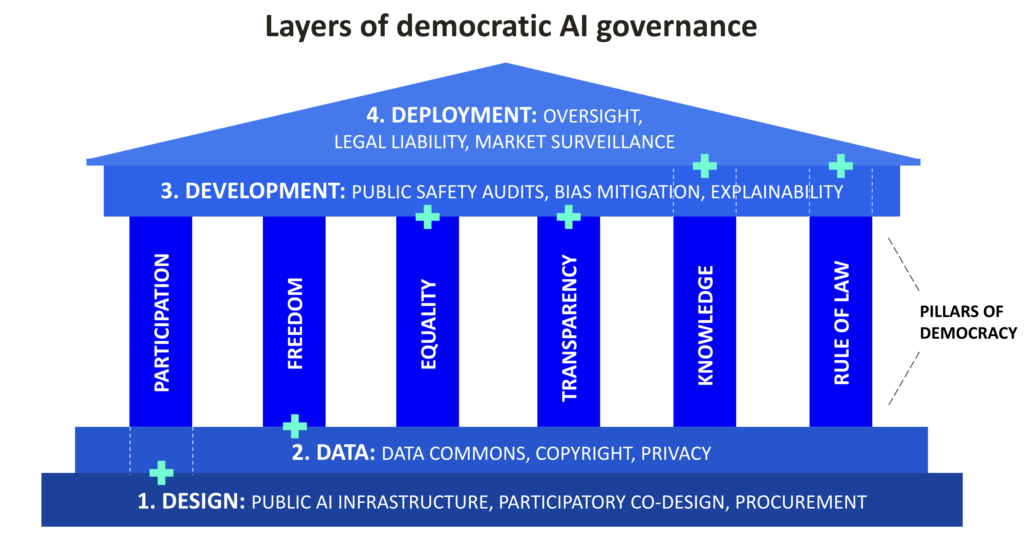

Accepting this challenge, we propose a holistic approach to democratic AI governance. This policy brief introduces a framework that embeds democratic pillars — participation, freedom, equality, transparency, knowledge, and the rule of law — across the entire AI lifecycle. We argue that democratic safeguards cannot wait until an AI system is already deployed and in use. Instead, they must cut across every layer of governance, from the initial design and data collection to the very infrastructure underlying the technology.

The framework is particularly relevant to the European Union, which currently lacks the sovereign AI infrastructure necessary for democratic governance. To implement the framework for democratic AI governance in the EU context, we outline a policy roadmap for European policymakers. The roadmap is organised into a sequence of short-, mid-, and long-term policy actions, which support the goal of AI strengthening democracy by 2035:

Short-term 2026–2028: Enforcing regulation & building public AI infrastructure

This phase focuses on defending and enforcing existing regulations while initiating the build-up of public AI infrastructure. It recognises that democratic AI governance depends not only on rules and standards but also on digital sovereignty across the technical stack, alongside sufficient public-sector capacity to implement, oversee, and enforce regulations in practice.

Mid-term 2029–2032: Democratic adoption

As European AI infrastructure matures, this phase emphasises democratic AI adoption. Democratic safeguards move from isolated requirements to default practices embedded across the AI lifecycle through capable institutions, aligned incentives, and public accountability. Democratic practices at this stage ensure that the public infrastructure and regulatory frameworks are not co-opted by private interests.

Long-term 2033–2035: Exercising AI sovereignty

At this point, public AI infrastructure and democratic practices converge, enabling public oversight of AI. This phase centres on establishing AI sovereignty, understood as the ability of citizens and public institutions to meaningfully govern critical AI infrastructures, data, and capabilities rather than remaining dependent on external commercial actors. Only when public AI infrastructure and democratic participation meet does democratic AI governance become possible.

Our research offers 18 concrete policy recommendations, organised into five AI policy tracks, to facilitate the implementation of the framework:

- regulatory enforcement

- public AI infrastructure

- investments and innovation

- AI literacy

- research and standards

The recommendations focus on short-term action (2026–2028) and provide practical guidelines for experts and policymakers on democratic AI governance.

The policy brief is based on research conducted in the KT4D project (2023-2026, Grant Agreement No. 101094302).

