
Artificial intelligence (AI) can enable great societal benefits by allowing for more efficient and personalized services. Indeed, automated decision-making systems based on machine learning algorithms are increasingly utilized in essential public or private services like recruitment, education, finance, health care, advertising, and policing. However, this can also give rise to algorithmic bias: systematic discrimination against certain groups. As machine learning relies on pattern recognition across large datasets, it can automate intersectional bias against marginalized communities. Algorithmic decision-making can thereby inconspicuously maintain or even exacerbate historical racial and gender inequalities. Such harms have been recorded in numerous AI systems so far, ranging from recruiting systems that favor men to health-care algorithms that discriminate against people of color.

Algorithmic bias can arise from:

1. Data

Data is not neutral; it always reflects existing power relations in a society. Algorithms trained on historical data can replicate and potentially reinforce existing social inequalities, even if protected attributes are excluded from the data. This happens because AI systems can learn to use ‘proxy variables’, such as postcodes, that correlate with protected attributes and thereby replicate historic biases.
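To make the proxy mechanism concrete, here is a minimal Python sketch on invented toy data. The records, postcodes, and helper names (`train_postcode_model`, `predict`, `rate_by_group`) are all hypothetical. The "model" never sees the group attribute; it only learns historical approval rates per postcode, yet because postcode correlates with group membership it reproduces, and here even widens, the historical gap.

```python
from collections import defaultdict

# Hypothetical toy records: (group, postcode, historical_decision).
# "group" stands in for a protected attribute and is never shown to the model.
RECORDS = [
    ("A", "00100", 1), ("A", "00100", 1), ("A", "00100", 0), ("A", "00200", 1),
    ("B", "00900", 0), ("B", "00900", 0), ("B", "00900", 1), ("B", "00200", 0),
]


def train_postcode_model(records):
    """Learn the historical approval rate per postcode (group is ignored)."""
    counts = defaultdict(lambda: [0, 0])  # postcode -> [approvals, total]
    for _group, postcode, decision in records:
        counts[postcode][0] += decision
        counts[postcode][1] += 1
    return {pc: approved / total for pc, (approved, total) in counts.items()}


def predict(model, postcode, threshold=0.5):
    """Approve (1) if the postcode's historical approval rate meets the threshold."""
    return int(model.get(postcode, 0.0) >= threshold)


def rate_by_group(records, outcome):
    """Share of positive outcomes per group; `outcome` maps a record to 0 or 1."""
    totals = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for record in records:
        totals[record[0]][0] += outcome(record)
        totals[record[0]][1] += 1
    return {g: pos / n for g, (pos, n) in totals.items()}


model = train_postcode_model(RECORDS)
historical = rate_by_group(RECORDS, lambda r: r[2])
automated = rate_by_group(RECORDS, lambda r: predict(model, r[1]))
print(historical)  # {'A': 0.75, 'B': 0.25}
print(automated)   # {'A': 1.0, 'B': 0.25}
```

Dropping the group column did not remove the disparity: the gap between the groups grows from 0.5 in the historical decisions to 0.75 in the automated ones, because the postcode carries the group information.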

2. Algorithmic design and processing

The algorithms themselves can also be biased. Such bias can be alleviated through debiasing techniques and organizational practices such as diverse teams and participatory design. A lack of transparency and explainability in AI systems is an additional challenge: discrimination is unlikely to be identified and mitigated if AI systems remain opaque black boxes whose decision-making logic is not understood.
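As one example of a debiasing technique, the sketch below implements reweighing, a pre-processing method known from the fairness literature (Kamiran and Calders); the post itself does not name a specific technique. Each training example receives the weight P(group) · P(label) / P(group, label), so that group and label look statistically independent in the weighted data. The toy groups and labels are invented, and the function names are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight for each (group, label) pair: P(group) * P(label) / P(group, label).

    With raw counts this simplifies to (n_g * n_y) / (n * n_gy).
    """
    n = len(labels)
    n_group = Counter(groups)
    n_label = Counter(labels)
    n_joint = Counter(zip(groups, labels))
    return {
        (g, y): (n_group[g] * n_label[y]) / (n * n_gy)
        for (g, y), n_gy in n_joint.items()
    }


def weighted_positive_rate(groups, labels, weights, group):
    """Share of positive labels within `group` after applying the weights."""
    total = positive = 0.0
    for g, y in zip(groups, labels):
        if g == group:
            w = weights[(g, y)]
            total += w
            positive += w * y
    return positive / total


# Hypothetical historical labels: group A was favoured 3:1, group B 1:3.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
print(weights)
for g in ("A", "B"):
    # Both groups come out at 0.5, up to floating-point rounding.
    print(g, weighted_positive_rate(groups, labels, weights, g))
```

Reweighing changes only how much each historical example counts during training, not the labels themselves. It is a purely technical step and does not by itself address the organizational and contextual issues raised here.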

3. Model deployment in practice

Implementation and use cases of AI in different societal contexts can have major ethical implications, especially if a system is deployed in a context for which it was not intended. AI systems should therefore be seen as socio-technical systems that require societal debate about their acceptability and possible risks, especially with the local communities that stand to be most affected.

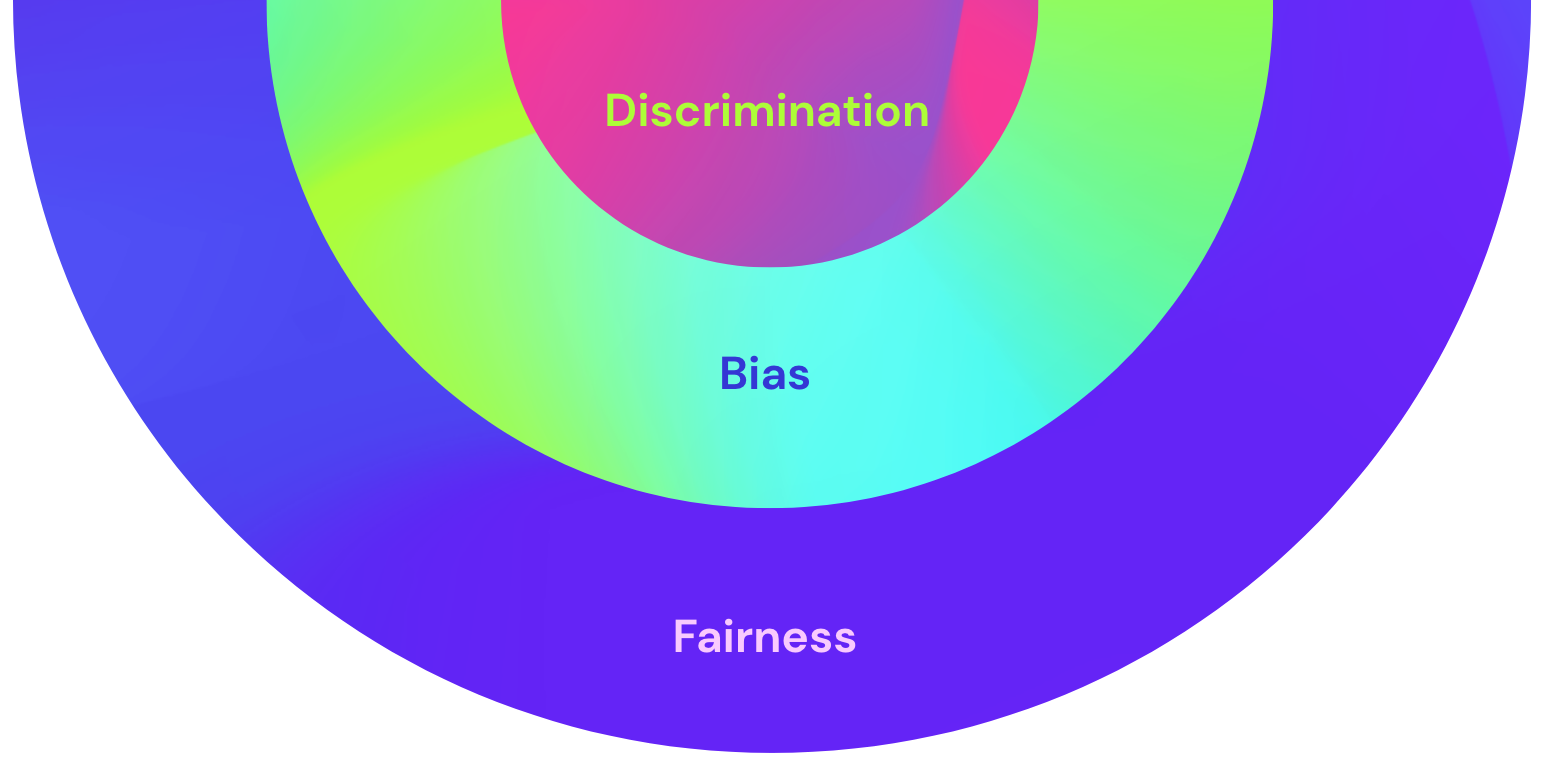

From bias to discrimination

In the discourse, algorithmic bias and fairness are broader terms grounded in AI ethics and technical machine learning, encompassing various bias mitigation techniques and fairness metrics. Algorithmic discrimination and equality, on the other hand, are specific legal terms that refer to the unjustified treatment of individuals or groups on the basis of protected characteristics, whether explicit (direct) or implicit (indirect). Such protected characteristics include sex, race or ethnic origin, disability, sexual orientation, religion or belief, and age.

Adapted from Centre for Data Ethics and Innovation (2020).

Algorithmic discrimination is especially problematic because legal systems are lagging behind in their capacity to address this emerging phenomenon. As Janneke Gerards and Raphaële Xenidis (2021) have shown, EU non-discrimination law faces many challenges in dealing with algorithmic discrimination:

- Intersectional discrimination: Algorithmic profiling can lead to intersectional discrimination across a number of characteristics due to proxy variables, yet EU law only considers discrimination on the basis of separate, specific protected grounds.

- Indirect discrimination: While most algorithmic discrimination is likely to be indirect, assessing it is contextual and open-ended in practice. One needs to prove that an apparently neutral rule disproportionately disadvantages a protected group. Moreover, indirect discrimination can be justified if it serves an objective and reasonable aim, such as the accuracy of the algorithm.

- Legal gaps in EU law: While machine learning is widely used in goods and services, advertising, and finance, EU equality law applies to these areas only for some of the protected grounds.

- Enforcement: Establishing prima facie evidence of differential treatment and discrimination is difficult due to the lack of transparency and explainability in AI. Allocating responsibility and liability for algorithmic discrimination is also challenging due to the complexity and overlap of different AI systems and the number of actors involved (developers, deployers, and users).
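Because indirect discrimination turns on whether an apparently neutral rule puts a protected group at a particular disadvantage, a common first statistical step is to compare outcome rates between groups. The sketch below, on invented numbers, computes the difference and ratio of selection rates. The function names and the 0.8 review threshold are assumptions for illustration only: the threshold borrows the US "four-fifths" rule of thumb, whereas EU non-discrimination law sets no fixed numeric cut-off, which is part of why the assessment remains contextual.

```python
def selection_rates(decisions):
    """Map each group to its share of positive (1) decisions."""
    return {group: sum(d) / len(d) for group, d in decisions.items()}


def disparity_report(decisions, protected, reference, threshold=0.8):
    """Compare a protected group's selection rate with a reference group's.

    `threshold` is an illustrative assumption (US four-fifths heuristic),
    not a legal test under EU law.
    """
    rates = selection_rates(decisions)
    if rates[reference] == 0:
        raise ValueError("reference group has no positive decisions")
    ratio = rates[protected] / rates[reference]
    return {
        "protected_rate": rates[protected],
        "reference_rate": rates[reference],
        "difference": rates[reference] - rates[protected],
        "ratio": ratio,
        "flag_for_review": ratio < threshold,
    }


# Hypothetical screening outcomes from an automated recruiting tool.
outcomes = {
    "women": [1, 0, 0, 0, 1, 0, 0, 0, 0, 0],
    "men": [1, 1, 0, 1, 0, 0, 1, 0, 0, 0],
}
report = disparity_report(outcomes, protected="women", reference="men")
print(report["ratio"], report["flag_for_review"])  # 0.5 True
```

A flag is only a prompt for the contextual legal assessment described above, including whether the disparity could be justified; it is not itself a finding of discrimination.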

Positive framing

The fundamental aim of EU non-discrimination law is arguably not only to prevent ongoing discrimination but also to achieve substantive rather than merely formal equality. Substantive equality means (1) recognizing that the societal status quo is not neutral and (2) leveling the playing field by creating fair procedures to overcome the historical inequalities against marginalized groups in society. Similarly, the Finnish Non-Discrimination Act obligates public authorities, educational institutions, and employers to promote the realization of equality.

AI can therefore also be envisioned as a tool against discrimination by:

- monitoring, diagnosis, and testing to identify biases in current decision-making

- different bias mitigation and debiasing techniques to prevent discrimination

- more consistent and transparent decision-making through algorithms

This perspective may also inform which fairness metrics one chooses for machine learning algorithms. For example, Sandra Wachter, Brent Mittelstadt, and Chris Russell (2021) propose using bias-transforming metrics that take pre-existing biases and historical inequalities into account. Data Feminism (2020) by Catherine D’Ignazio and Lauren F. Klein makes similar calls for proactively tackling inequalities by examining and challenging unequal power structures in a datafied society.
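The distinction drawn by Wachter, Mittelstadt, and Russell can be illustrated with two common metrics. As we read their argument, metrics that measure errors against historical labels, such as the true-positive-rate gap behind "equal opportunity", are bias-preserving because they treat past decisions as ground truth, while demographic parity, which compares outcome rates directly, is bias-transforming. In the hypothetical example below, a model that exactly reproduces biased historical decisions passes the first check and fails the second. Data and function names are invented for illustration.

```python
def _rate(values):
    """Mean of a sequence of 0/1 values."""
    values = list(values)
    return sum(values) / len(values)


def demographic_parity_gap(groups, preds, a, b):
    """Bias-transforming style check: compare positive-prediction rates directly."""
    def positive_rate(g):
        return _rate(p for gg, p in zip(groups, preds) if gg == g)
    return positive_rate(a) - positive_rate(b)


def true_positive_rate_gap(groups, labels, preds, a, b):
    """Bias-preserving style check: compare recall against historical labels."""
    def tpr(g):
        return _rate(
            p for gg, y, p in zip(groups, labels, preds) if gg == g and y == 1
        )
    return tpr(a) - tpr(b)


# Hypothetical historical decisions that favoured group A,
# and a model that reproduces them exactly.
groups = ["A"] * 4 + ["B"] * 4
historical = [1, 1, 1, 0, 1, 0, 0, 0]
preds = list(historical)

print(true_positive_rate_gap(groups, historical, preds, "A", "B"))  # 0.0
print(demographic_parity_gap(groups, preds, "A", "B"))  # 0.5
```

By the first measure the model looks perfectly fair, because it is judged against the very decisions that encoded the disparity; the second surfaces the gap. Which metric is appropriate remains a normative and legal choice that the code cannot settle.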

Call for AI governance

The above highlights the need for public sector standard-setting, assessment frameworks, and auditing to ensure fair and inclusive AI systems. Algorithmic impact assessment frameworks are beginning to emerge internationally. They are one way of realizing fairness in AI development and governance, but so far only with a limited focus on equality and non-discrimination. Such frameworks should encompass both the negative risks of discrimination and the positive impacts of AI solutions on advancing equality. Somewhat worryingly, while the EU’s new regulatory framework for high-risk AI systems requires risk assessment, representative datasets, and traceability, it does not explicitly refer to non-discrimination or equality.

All of this underlines the need for a multidisciplinary, socio-technical approach to AI governance. AI governance must integrate technical machine learning with ethics, sociology, and law. As bias does not lie only in data, one needs to go beyond mathematical models of fairness. We also need to look at the social context, diversity, and power structures around AI: whose values are embedded in these systems, who is oppressed by them, and who profits from them. As algorithms affect society at large, public deliberation and participatory design about the associated inequalities are essential for democratizing algorithmic fairness.

Demos Helsinki will continue to work on fair and inclusive governance of emerging technologies in our ongoing national (Avoiding AI biases) and European (TOKEN and ATARCA) research projects, as well as upcoming projects.

Photo by Joakim Honkasalo on Unsplash
